Have you ever wondered whether you can tell if an AI wrote something? According to recent news, OpenAI, one of the leading AI research labs, has developed a text completion model whose output can be very hard to distinguish from human writing. In other words, it’s getting harder to tell whether a person or an AI wrote that blog post or social media comment.

MSN, a popular web portal launched by Microsoft in 1995, offers a wide range of internet services and content. It has become a go-to website for many users, providing news, weather updates, sports coverage, entertainment articles, and even a search engine. In addition, MSN offers email services, online gaming, and chat forums, making it a one-stop destination for internet needs.

One of the reasons why MSN has gained such popularity is its ability to cater to individual interests and preferences. With the option to personalize the portal, users can easily access the content they care about the most. Whether you’re interested in staying up-to-date with the latest news, finding a recipe for the perfect dinner, or engaging in online discussions, MSN has got you covered.

In our upcoming article, we’ll delve deeper into how OpenAI’s advancements in AI writing are changing the landscape of online content generation and how MSN continues to be a reliable and versatile platform for users worldwide. Stay tuned to learn more about the future of AI-generated content and explore the diverse offerings of MSN.

Introduction

OpenAI, a leading artificial intelligence research lab, has been at the forefront of developing powerful text-generating models. These models, such as their groundbreaking GPT-3, have been recognized for their impressive language generation capabilities. However, with the increasing prevalence of AI-generated content, concerns have arisen about the ability to differentiate between human-written and AI-written text. In this article, we will explore OpenAI’s mission, the advancements in AI technology, the challenges in identifying AI-generated text, and the implications and ethical considerations surrounding this issue.

What is OpenAI?

Definition

OpenAI is a research organization that aims to ensure that artificial general intelligence (AGI) benefits all of humanity. Its founders include Elon Musk, Sam Altman, Greg Brockman, Ilya Sutskever, Wojciech Zaremba, and John Schulman. OpenAI’s primary focus is on developing safe and beneficial AI and promoting the adoption of these technologies across various industries.

Overview of OpenAI’s Mission

OpenAI’s mission is to ensure that artificial general intelligence (AGI) benefits everyone. They aim to build AGI that is safe and beneficial and to distribute its benefits broadly. OpenAI commits to using their influence to avoid enabling uses of AI or AGI that harm humanity or unduly concentrate power. Their mission emphasizes long-term safety, technical leadership, and cooperation with other research and policy institutions.

AI-generated Content

Advancements in AI Technology

The rapid advancements in AI technology have led to the emergence of powerful text-generating models, including OpenAI’s GPT-3. These models can produce human-like text, mimicking writing styles and adapting to the surrounding context. They are trained on enormous datasets and can generate coherent paragraphs, essays, and even creative pieces such as poetry.

Impact on Content Creation

The rise of AI-generated content has profoundly impacted various domains, including journalism, blogging, customer support, and creative writing. AI-generated articles and blog posts can save time and resources for companies and individuals. However, this shift also raises concerns about the authenticity and transparency of content produced by AI models.

OpenAI’s Text Generating Models

GPT-3: A Breakthrough in Language Generation

OpenAI’s GPT-3 (Generative Pre-trained Transformer 3) is a language model that has gained significant attention for its impressive language generation capabilities. With 175 billion parameters, GPT-3 was one of the largest language models at the time of its release in 2020. It can perform various language tasks, including translation, summarization, question-answering, and even creative writing.

Capabilities and Limitations

While GPT-3 has demonstrated remarkable language generation abilities, it also has limitations. The model can occasionally produce nonsensical or biased content. It relies heavily on the data it was trained on, which means its output is not always accurate or reliable. Moreover, GPT-3 can sometimes struggle with context and coherence, leading to inconsistent or irrelevant responses.

Identifying Generated Text Written By AI

Challenges in Distinguishing AI and Human Writing

As AI-generated text becomes more sophisticated, distinguishing it from human writing has become harder. AI models like GPT-3 can mimic writing styles and formatting, making it difficult to differentiate their output from that of a human. Additionally, AI-generated text can be contextually relevant and semantically accurate, further blurring the lines between human and AI contributions.

Testing and Evaluation Techniques

Identifying AI-generated text requires the development of effective testing and evaluation techniques. Researchers and developers have been exploring various approaches, including adversarial testing, linguistic analysis, and statistical methods. These techniques aim to uncover patterns and characteristics specific to AI-generated text and distinguish them from human-written content.
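To make the idea of linguistic and statistical analysis more concrete, here is a minimal sketch in Python of two simple stylometric features that could feed such an analysis: vocabulary variety (type-token ratio) and the spread of sentence lengths. The function names and the sample sentence are hypothetical, chosen only for illustration, and neither feature is claimed to be a reliable indicator on its own.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on ., ! and ? and return the word count of each non-empty sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def type_token_ratio(text: str) -> float:
    """Distinct words divided by total words (case-insensitive)."""
    words = re.findall(r"[a-z']+", text.lower())
    return len(set(words)) / len(words) if words else 0.0


def sentence_length_spread(text: str) -> float:
    """Population standard deviation of sentence lengths, measured in words."""
    lengths = sentence_lengths(text)
    return statistics.pstdev(lengths) if lengths else 0.0


# Hypothetical sample text, used only to show the calls.
sample = "The cat sat. The cat sat on the mat. Why?"
print(sentence_lengths(sample))        # word count per sentence
print(type_token_ratio(sample))        # vocabulary variety
print(sentence_length_spread(sample))  # variation in sentence length
```

Features like these only become meaningful when compared across many documents of known origin; a single value says little about who wrote a given text.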

OpenAI’s Claims and Challenges

OpenAI’s Statement on Identifying AI Text

OpenAI acknowledges the challenges of identifying AI-generated text and is committed to addressing this issue. In their “Statement on Identifying AI Text,” they state that they will invest in research and engineering to improve the transparency and accountability of AI-generated content.

Issues with Detecting AI-generated Content

Despite OpenAI’s commitment, significant challenges exist in reliably detecting AI-generated content. Existing models can generate text that appears convincingly human-like, making it difficult for both humans and automated tools to distinguish it from human writing. This creates a risk of misinformation and deceptive practices.

Current State of Identifying AI-generated Text

Research Findings

Extensive research is being conducted to improve the identification of AI-generated text. Some studies have proposed novel detection techniques based on statistical analysis, while others focus on linguistic features and pattern recognition. These approaches aim to strengthen the ability to discriminate between AI and human-generated content.
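One statistical signal that is often discussed in this context is perplexity: how surprising a text is to a reference language model. The sketch below is a deliberately simplified illustration in Python. It assumes a toy unigram model with add-one smoothing as a stand-in for the large neural models a real detector would use, and the corpus and function names are hypothetical.

```python
import math
from collections import Counter


def train_unigram(corpus_tokens: list[str]) -> tuple[Counter, int, int]:
    """Return token counts, total token count, and vocabulary size.

    The vocabulary size includes one extra slot for unseen tokens, so the
    smoothed probabilities below sum to 1.
    """
    counts = Counter(corpus_tokens)
    return counts, len(corpus_tokens), len(counts) + 1


def perplexity(tokens: list[str], counts: Counter, total: int, vocab_size: int) -> float:
    """Perplexity of `tokens` under the add-one-smoothed unigram model."""
    if not tokens:
        raise ValueError("cannot score an empty token list")
    log_prob = 0.0
    for tok in tokens:
        # Counter returns 0 for unseen tokens, so they get probability 1 / (total + vocab_size).
        log_prob += math.log((counts[tok] + 1) / (total + vocab_size))
    return math.exp(-log_prob / len(tokens))


# Hypothetical toy reference corpus.
counts, total, vocab_size = train_unigram("a a b".split())
print(perplexity(["a"], counts, total, vocab_size))  # frequent token: low perplexity
print(perplexity(["z"], counts, total, vocab_size))  # unseen token: high perplexity
```

Lower perplexity means the text is more predictable to the reference model. Whether predictability separates AI-written from human-written text in practice depends heavily on the model and the data, which this toy example does not capture.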

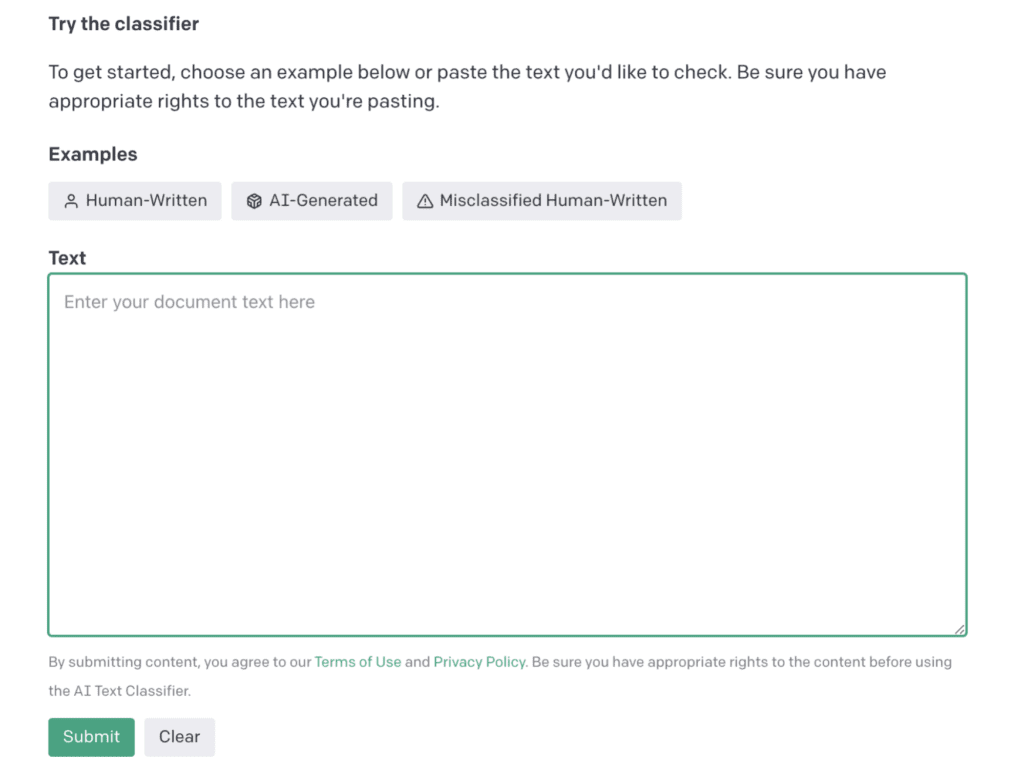

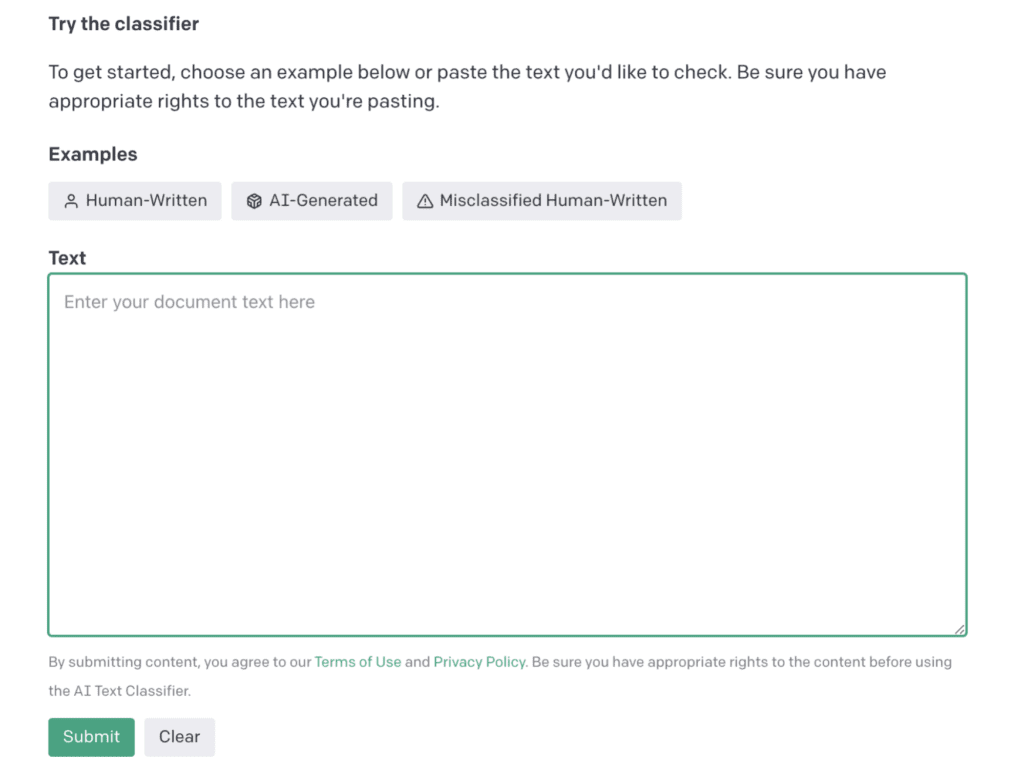

Existing Tools and Solutions

Several tools and platforms have been developed to assist in identifying AI-generated text. These tools utilize a combination of manual evaluation techniques and automated analysis. While they provide valuable insights, they are not foolproof and require continuous improvement to keep up with the evolving capabilities of AI models.
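Saying that a detection tool is "not foolproof" can be made measurable. The following Python sketch shows one way to score a detector against texts of known origin, using the true positive rate, false positive rate, and precision. The `DetectorReport` class, the `evaluate` function, and the sample labels are hypothetical and exist only for this illustration.

```python
from dataclasses import dataclass


@dataclass
class DetectorReport:
    true_positive_rate: float   # share of AI-written texts that were flagged
    false_positive_rate: float  # share of human-written texts wrongly flagged
    precision: float            # share of flagged texts that really were AI-written


def _ratio(numerator: int, denominator: int) -> float:
    """Divide safely, returning 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def evaluate(is_ai: list[bool], flagged: list[bool]) -> DetectorReport:
    """Compare ground-truth labels with a detector's flags."""
    if len(is_ai) != len(flagged):
        raise ValueError("labels and predictions must have the same length")
    pairs = list(zip(is_ai, flagged))
    tp = sum(a and f for a, f in pairs)
    fp = sum((not a) and f for a, f in pairs)
    fn = sum(a and (not f) for a, f in pairs)
    tn = sum((not a) and (not f) for a, f in pairs)
    return DetectorReport(_ratio(tp, tp + fn), _ratio(fp, fp + tn), _ratio(tp, tp + fp))


# Hypothetical data: 4 AI-written and 6 human-written texts.
is_ai = [True] * 4 + [False] * 6
flagged = [True, False, False, False, True, False, False, False, False, False]
print(evaluate(is_ai, flagged))
```

The false positive rate deserves particular attention, because each false positive is a human author wrongly told that their text looks machine-written.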

Evaluation Methods

Manual Evaluation Techniques

Manual evaluation techniques involve human reviewers assessing and scrutinizing text for indicators of AI generation. Reviewers can analyze coherence, logical flow, stylistic elements, and nuanced contextual understanding. However, this approach is time-consuming and subjective, and its accuracy may vary with the reviewer’s expertise.
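The subjectivity of manual review can be quantified by checking how often two reviewers agree beyond what chance alone would produce. A standard statistic for this is Cohen’s kappa; the Python sketch below computes it for two hypothetical reviewers who each label the same texts as "ai" or "human". The labels and the function name are assumptions made for the example.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Expected agreement if each rater labelled at random with their own label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two reviewers for the same four texts.
reviewer_1 = ["ai", "ai", "human", "human"]
reviewer_2 = ["ai", "human", "human", "human"]
print(cohens_kappa(reviewer_1, reviewer_2))
```

A kappa near 1 indicates strong agreement, while a value near 0 means the reviewers agree no more often than chance would predict.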

Automated Detection and Analysis

Automated detection methods leverage machine learning algorithms and linguistic analysis to identify AI-generated text. These techniques aim to detect patterns, anomalies, or statistical variations specific to AI models. However, they face challenges in keeping up with the constantly evolving landscape of AI technology, requiring regular updates and adaptation.
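As a rough illustration of the machine learning side, the Python sketch below trains a tiny multinomial Naive Bayes classifier on word counts. This is a simple stand-in technique, not the method used by any particular detection tool, and the four training sentences are hypothetical toy data, far too small for real use.

```python
import math
from collections import Counter


class NaiveBayesDetector:
    """Multinomial Naive Bayes over lowercase word counts, with add-one smoothing."""

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayesDetector":
        # sorted() keeps label order deterministic across runs.
        self.word_counts = {label: Counter() for label in sorted(set(labels))}
        self.doc_counts = Counter(labels)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(text.lower().split())
        self.vocab = set().union(*self.word_counts.values())
        return self

    def log_score(self, text: str, label: str) -> float:
        """Log prior plus summed log likelihood of the known words in `text`."""
        counts = self.word_counts[label]
        total = sum(counts.values())
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        for word in text.lower().split():
            if word in self.vocab:  # words never seen in training are ignored
                score += math.log((counts[word] + 1) / (total + len(self.vocab)))
        return score

    def predict(self, text: str) -> str:
        return max(self.word_counts, key=lambda label: self.log_score(text, label))


# Hypothetical toy training data.
detector = NaiveBayesDetector().fit(
    [
        "as an ai language model i cannot",
        "in conclusion it is important to note",
        "lol no way that happened",
        "ugh my train was late again",
    ],
    ["ai", "ai", "human", "human"],
)
print(detector.predict("it is important to note that"))
print(detector.predict("my train was late"))
```

A classifier like this learns only the vocabulary of its training set, which is one reason such detectors need regular retraining as AI models and writing habits change.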

Implications and Ethical Considerations

Misinformation and Deceptive Practices

The inability to reliably identify AI-generated text raises concerns about the spread of misinformation and deceptive practices. AI models can be exploited to disseminate false or misleading information at scale, potentially leading to social and political consequences. This calls for increased awareness, scrutiny, and responsible use of AI-generated content.

Concerns over AI-generated Influence

AI-generated content has the potential to shape opinions, influence decisions, and impact public discourse. When individuals are unaware that they are interacting with AI-generated text, transparency, accountability, and the ability to make informed choices are all undermined. This highlights the importance of creating mechanisms to differentiate AI-generated and human-generated content.

Conclusion

While OpenAI continues its efforts to improve the identification of AI-generated text, the challenges in reliably distinguishing it from human writing persist. The emergence of powerful language models like GPT-3 highlights the need for robust evaluation methods and the responsible use of AI-generated content. As AI technology evolves, addressing ethical considerations and ensuring transparency, authenticity, and accountability in AI-driven content creation is crucial. Only then can we harness the potential benefits of AI while mitigating the risks associated with misinformation and deceptive practices.